
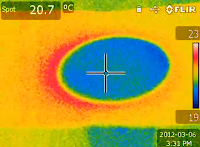

Figure 1

One of the most fascinating parts of science is the search for answers to strange phenomena. In the past nine months, I have posted more than fifty IR videos on my Infrared YouTube channel. These experiments are all very easy to do, but not all of them are easy to explain, and some of them appear quite strange at first glance. In this article, I will try to explain one of those experiments with one of my other skills -- molecular simulation.

Warming surprise

This incredibly simple IR experiment is about putting a piece of paper above a cup of water that is at (nearly) room temperature (Figure 1). I hear you asking, what is the big deal? You have probably done that several times in your life, for whatever reason.

If you happen to have an IR camera and you watch this process through it, you may be surprised. Many of you know that water in an open cup is slightly cooler (1-2°C lower) than room temperature because of evaporative cooling: the constant evaporation of water molecules from the liquid takes thermal energy away from the cup and keeps it a bit cooler than the room (which is why you feel cold when you step out of a swimming pool). You may think that the paper would also cool down when you put it on top of the water. After all, at room temperature the paper is a bit warmer than the water in the cup and, based on what your high school science teacher told you, heat should flow from the warmer paper to the cooler water, causing the temperature of the paper to drop a bit.

But the result was exactly the opposite -- the paper actually warmed up (Figure 2)! And the warming appeared to be pretty significant -- up to 2°C could be observed on a dry winter day. I don't know your reaction to this finding, but I was baffled when I saw it because this effect first appeared to be a violation of the Second Law of Thermodynamics (which, of course, is impossible)! In fact, the reason I did this experiment at that time was to figure out how sensitive my IR camera might be. My intention was to exploit the evaporative cooling of water to provide a small, stable thermal gradient. I wanted to see whether the IR camera could capture the weak heat transfer between the water and the paper.

I quickly figured out that the culprit responsible for this surprising warming must be the water vapor, which we cannot see with the naked eye. But just because we can't see something doesn't mean it doesn't exist. When water molecules in the vapor encounter the surface molecules of the paper, they are captured (this is known as adsorption). As more and more water molecules are captured and condense onto the paper surface, they return to the liquid state and, according to the Law of Conservation of Energy, release the excess energy they carry, which causes the paper to warm up. In other words, the paper somehow recovers the energy that the water in the cup lost through evaporation. As you can see now, this is a pretty delicate thermodynamic cycle that connects two phase changes, evaporation and condensation, in two different places, along with their latent heats. The physicists among us would appreciate it if I said that this shows entropy at work: evaporation is an entropic effect caused by water molecules wanting to maximize their entropy by leaving their more organized liquid state. The interaction between the vapor molecules and the paper molecules acts to reverse this process by returning the water molecules to the condensed liquid state, and a certain amount of net energy can be extracted from this (known as the enthalpy of vaporization). Of course, the adsorption process itself, caused by the hydrogen bonding between water molecules and paper molecules, releases some amount of heat that contributes to the warming effect as well.


Figure 4: Sensor results.

At this point, I hope you have been enticed enough to want to try this out yourself. If you don't have an IR camera, you can use a temperature sensor or an IR thermometer as a substitute to observe this phenomenon (undoubtedly, nothing beats an IR camera in terms of seeing heat -- with a point thermometer you just need to be patient and willing to do more tedious work).

But wait, this is not the end of the story!

Dynamic equilibrium

If you keep observing the paper, you will see that this condensation warming effect diminishes in a few minutes (Figure 3). This trend is shown more clearly in Figure 4, in which the temperature of the paper was recorded for ten minutes using a fast-response surface temperature sensor. What the heck happened?

The answer to this question can be illustrated using a schematic molecular simulation (Figure 5) I designed to explain the underlying molecular physics (in that simulation water molecules are simplified as single round particles). After water molecules condense onto the paper surface, a thin layer of condensate forms. When it becomes thick enough, water molecules will evaporate from it, too, just like from the surface layer of water in the cup. When the rate of evaporation equals the rate of condensation, there is no more net warming: the condensation warming and evaporative cooling eventually reach a "break-even" point. Reaching this equilibrium state doesn't mean that condensation and evaporation on the surface of the paper stop. In fact, water molecules keep condensing onto the layer and evaporating from it. This is known as "dynamic equilibrium." If you move the paper, you will break this dynamic equilibrium. Figure 6 shows a pattern in which evaporative cooling and condensation warming occurred simultaneously on a single piece of paper after the paper had been shifted a bit. In Figure 6, evaporation dominated in the blue zone that was shifted out of the cup area, condensation dominated in the white zone that was shifted into the cup area, and the overlap zone in the middle remained close to the equilibrium state because it was the zone that still remained inside the cup area -- so business as usual.
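The rise and fall of the paper temperature can also be sketched with a lumped rate model. This is not the molecular simulation of Figure 5, just a toy calculation in which the condensation flux is constant, the evaporation flux grows in proportion to the film mass until the two balance, and the paper leaks heat to the room. Every parameter below (paper weight, specific heat, heat loss coefficient, fluxes, saturation film mass) is an assumed, illustrative value that has not been fitted to Figure 4.

```python
# Toy lumped model of condensation warming on the paper.
# All parameter values are assumptions chosen for illustration only.

def simulate(minutes=10.0, dt=0.1):
    """Return (times in s, paper temperature rise in K) from simple Euler steps."""
    latent = 2.44e6        # J/kg, latent heat of vaporization of water near 25 C
    paper_mass = 0.080     # kg/m^2, assumed (ordinary office paper)
    paper_cp = 1340.0      # J/(kg K), assumed specific heat of paper
    loss = 5.0             # W/(m^2 K), assumed heat loss coefficient to the room
    cond_rate = 5.0e-6     # kg/(m^2 s), assumed constant condensation flux
    film_sat = 5.0e-4      # kg/m^2, assumed film mass at which evaporation
                           # from the film balances condensation

    heat_cap = paper_mass * paper_cp   # J/(m^2 K)
    film, rise = 0.0, 0.0
    times, rises = [], []
    for i in range(int(minutes * 60 / dt)):
        # Evaporation from the film grows as the film builds up.
        evap_rate = cond_rate * min(film / film_sat, 1.0)
        net = cond_rate - evap_rate            # net mass flux onto the paper
        film += net * dt
        # Latent heat released by net condensation minus heat lost to the room.
        rise += (latent * net - loss * rise) / heat_cap * dt
        times.append(i * dt)
        rises.append(rise)
    return times, rises


times, rises = simulate()
peak = max(rises)
print(f"peak rise {peak:.2f} K at {times[rises.index(peak)]:.0f} s, "
      f"rise after 10 min {rises[-1]:.3f} K")
```

With these made-up numbers the paper warms by a degree or two within the first minute and then drifts back toward room temperature, even though condensation and evaporation both continue at the same rate. That is the qualitative signature of dynamic equilibrium.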

As you can see, there is a lot of science in this "simple" experiment! But nothing we have done so far requires expensive materials or supplies. Everything needed to do this experiment is probably within arm's reach if you are reading this article at home (and you happen to have a digital thermometer, or better, an IR camera, nearby). If you are an educator, this experiment should fascinate you because it makes a perfect inquiry activity for students. If you are a scientist, this experiment should fascinate you because what I have shown you is in fact an atomic layer deposition experiment that anyone can do -- a Fermi calculation suggests that the thickness of the layer is in the nanometer range (only a few hundred layers of water molecules or 1/10,000th of the diameter of your hair). What we are seeing is in fact a signal from the nanoscale world! Isn't that cool?
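One way such a Fermi estimate could go (not necessarily the calculation behind the figure quoted above) is to ask how much water must condense to warm the paper by the observed 2°C. The paper weight, its specific heat, and the 0.3 nm size of a water molecule are assumed round numbers, and heat lost to the room is ignored, so the result is an order-of-magnitude estimate at best.

```python
# Fermi estimate: how thick a water film warms a sheet of paper by delta_t?
# Paper properties and molecule size are assumed round numbers.

def film_thickness_nm(delta_t=2.0, paper_mass=0.080, paper_cp=1340.0,
                      latent=2.44e6, water_density=1000.0):
    """Film thickness (nm) whose latent heat warms the paper by delta_t kelvin."""
    heat = paper_mass * paper_cp * delta_t    # J per m^2 of paper
    water_mass = heat / latent                # kg of condensed water per m^2
    return water_mass / water_density * 1e9   # m -> nm


thickness = film_thickness_nm()
layers = thickness / 0.3   # assumed ~0.3 nm per molecular layer of water
print(f"film thickness ~{thickness:.0f} nm, about {layers:.0f} molecular layers")
```

With these inputs the film comes out at roughly 90 nm, or on the order of 300 molecular layers.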

Does our story end now? Absolutely not. The new questions you can ask are practically endless if you keep "thinking molecularly." The following are six extended questions I have asked myself. You can try to explore all of these without leaving your kitchen.

When will the paper cool down?

Returning to the original purpose of my experiment (looking for cooling due to heat transfer), can we find a situation in which we will indeed see cooling instead of warming? Yes, if the water is cold enough (Figure 7). When the water is cold, the evaporation rate drops, so fewer water molecules hit the surface of the paper. The energy gain from the weaker condensation warming cannot compensate for the energy loss due to heat transfer between the paper and the cold water. (By the way, I think the heat transfer in this case is predominantly radiative, because air doesn't conduct heat well and natural convection acts against heat transfer in this situation.)
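To get a feel for the size of the radiative term, here is a rough gray-body estimate based on the Stefan-Boltzmann law. It treats the paper and the water surface as two large parallel surfaces with a single lumped effective emissivity of 0.95, which is an assumption, and it ignores geometry (view factors) entirely. The example temperatures are also assumed.

```python
# Rough net radiative heat flux between the paper and the water surface.
# The effective emissivity and the example temperatures are assumptions.

SIGMA = 5.670374419e-8  # W/(m^2 K^4), Stefan-Boltzmann constant


def net_radiative_flux(t_paper_c, t_water_c, emissivity=0.95):
    """Net flux (W/m^2) radiated from the paper to the water; positive cools the paper."""
    t_paper = t_paper_c + 273.15
    t_water = t_water_c + 273.15
    return emissivity * SIGMA * (t_paper ** 4 - t_water ** 4)


# Paper at 22 C above cold water at 5 C, versus water only 1.5 C below the paper.
print(f"cold water:  {net_radiative_flux(22.0, 5.0):.0f} W/m^2")
print(f"room-temperature water: {net_radiative_flux(22.0, 20.5):.1f} W/m^2")
```

Under these assumptions the radiative loss to cold water is roughly ten times larger than the loss to water that is only slightly cooler than the room, which fits the picture of heat transfer winning out once the water is cold enough.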

What if the paper has been atop the water for a long time?

If you leave the paper atop the cup of water (water slightly cooler than room temperature, not ice water) for a few hours and come back to examine it, you will probably be surprised again: the paper is now cooler than room temperature (Figure 8). I wouldn't be surprised if you are totally confused now: this warming and cooling business is indeed quite complicated -- even though everything we have done so far has been limited to manipulating paper and water. To keep the story short, I will tell you that this is because water molecules have traveled through the porous layer of the paper by capillary action and shown up on the other side of the paper (this molecular movement is often known as percolation). Their evaporation from the upper side of the paper cools the paper down. The building science guys among us can use this experiment to teach moisture transport through materials. Can the temperature of the upper side somehow be used to gauge the moisture vapor transmission rate (MVTR) of a porous material? If so, this may provide a way to automatically measure the MVTR of different materials. The American Society for Testing and Materials has already established a standard based on IR sensors. Perhaps this experiment can be related to that.

Different materials have different dew points?

Do water molecules condense on other materials such as plastic? We know plastic materials do not absorb water (which is why they are good vapor barriers). If plastic materials are not cold enough, water molecules do not condense on them. Figure 9 shows this difference using a piece of paper half-covered by a transparency film taped to the underside. Warming was observed only in the paper part, indicating that water molecules do not condense on the plastic film. This experiment raises an interesting point: the so-called dew point, the temperature below which the water vapor in the air at a constant barometric pressure will condense into liquid water, may not be an entirely reliable way to predict condensation. Condensation actually depends on the chemical properties of the material surface. Hydrophobic (water-hating) materials like plastic tend to have a low dew point, whereas hydrophilic (water-loving) materials tend to have a high dew point. The porosity of the material should matter, too, because a more porous material provides a larger surface for interaction with water molecules -- paper happens to be such a material because of its fiber texture.
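For reference, the conventional dew point is computed from air temperature and relative humidity alone, with no term for the surface material, which is exactly the limitation discussed above. A minimal sketch using the Magnus approximation follows. The coefficients 17.62 and 243.12°C are one commonly used set and are good to a few tenths of a degree at ordinary room conditions. The example humidity values are assumed.

```python
import math

# Conventional dew point from the Magnus approximation.
# Note that nothing here depends on what the surface is made of.


def dew_point_c(temp_c, rel_humidity_pct, a=17.62, b=243.12):
    """Dew point (C) of air at temp_c (C) and the given relative humidity (%)."""
    gamma = math.log(rel_humidity_pct / 100.0) + a * temp_c / (b + temp_c)
    return b * gamma / (a - gamma)


# A comfortable room versus a dry winter room (both humidity values assumed).
print(f"20 C, 50% RH: dew point {dew_point_c(20.0, 50.0):.1f} C")
print(f"20 C, 20% RH: dew point {dew_point_c(20.0, 20.0):.1f} C")
```

By this formula a sheet of paper at room temperature sits well above the dew point of the room air, so any condensation on it must come from the locally humid air above the cup and from the affinity of the paper surface for water.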

Vapor pressure depression

What will happen if we add some salt (or baking soda or sugar) to the water? Figure 10 shows that the condensation warming effect becomes weaker. For our chemist friends, this is known as vapor pressure depression. The salt ions do not evaporate themselves, but their presence in a solution somehow slows down the evaporation of water molecules.
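The size of this effect can be estimated with Raoult's law, which says that the vapor pressure of the water scales with its mole fraction in the solution. The sketch below assumes an ideal solution and complete dissociation of table salt into two ions. Real salt solutions deviate from this, and the quantities of salt and water are made-up examples.

```python
# Raoult's law estimate of vapor pressure depression (ideal-solution assumption).

WATER_MOLAR_MASS = 18.015  # g/mol


def vapor_pressure_ratio(solute_grams, water_grams,
                         solute_molar_mass=58.44, particles_per_formula=2):
    """Ratio of solution vapor pressure to pure-water vapor pressure.

    Defaults describe NaCl (58.44 g/mol, assumed to split into 2 ions).
    For sugar (sucrose), use solute_molar_mass=342.3, particles_per_formula=1.
    """
    mol_water = water_grams / WATER_MOLAR_MASS
    mol_particles = particles_per_formula * solute_grams / solute_molar_mass
    return mol_water / (mol_water + mol_particles)


# Example amounts (assumed): 30 g dissolved in a 250 g cup of water.
print(f"salt:  {vapor_pressure_ratio(30.0, 250.0):.3f}")
print(f"sugar: {vapor_pressure_ratio(30.0, 250.0, 342.3, 1):.3f}")
```

Under these assumptions the salt lowers the vapor pressure by about 7%, while the same mass of sugar lowers it by well under 1%, because sugar molecules are much heavier and do not split into ions.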

A vapor column?

What will happen if the paper approaches the water from a different angle, such as vertically? What does the shape of the water vapor distribution above a cup of water look like? Does it look like the steam rising from a cup of coffee? Figure 11 could probably give you a clue.

What about alcohol?

So far we have used only water. What about other liquids? Alcohol is pretty volatile. So I tried some isopropyl alcohol (91%). Once again, I was baffled. Our experience with applying rubbing alcohol to our skin says that alcohol cools faster than water. So I expected that when the isopropanol molecules condense, they would release more heat. But this is not what Figure 12 suggests! Given the fact that the enthalpies of vaporization of alcohol and water are 44 and 41 kJ/mol, respectively, the only sensible explanation may be that the warming effect is due not only to the condensation of the vapor molecules, but also to the interaction between the vapor molecules and the cellulose molecules of the paper. If the interaction between an alcohol molecule and a cellulose molecule is weaker, then the adsorption rate will be slower and the adsorption of the alcohol molecule onto the paper surface will produce less heat. I don't know how to prove this now, but this could be a good topic of research.

Concluding remark

Even though this is a lengthy article (and thanks for making it to the end), I am pretty sure that the scientific exploration does not stop here. There are other questions that you can ask yourself. As for me, I have been intrigued by the fascinating thermodynamic cycles in a humble cup of water and have been wondering if they could be used to engineer something that can harvest the latent heats of evaporation and condensation. In other words, could we turn a cup of water into a tiny power plant to, say, charge my cell phone?

In theory, this is possible as any temperature gradient, however weak it may be, can be translated into electric current using a thermoelectric generator based on the Seebeck effect. The evaporation of water molecules from an open cup is a free gift of entropy from Mother Nature that ought to be harnessed some day.
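A quick back-of-the-envelope sketch shows what a thermoelectric generator might deliver from a gradient of this size. The 2°C difference comes from the observations above. The module's effective Seebeck coefficient (0.05 V/K) and internal resistance (2 ohms) are hypothetical values for a small off-the-shelf module, and the sketch optimistically assumes the full temperature difference appears across the module.

```python
# Back-of-the-envelope numbers for harvesting a 2 C gradient.
# Module parameters below are hypothetical, not measured values.


def carnot_limit(t_hot_c, t_cold_c):
    """Upper bound on heat-to-work efficiency between two temperatures (C)."""
    return 1.0 - (t_cold_c + 273.15) / (t_hot_c + 273.15)


def open_circuit_voltage(seebeck_v_per_k, delta_t):
    """Seebeck voltage V = S * dT for a module with effective coefficient S."""
    return seebeck_v_per_k * delta_t


def matched_load_power(voltage, internal_resistance):
    """Maximum electrical power (W) into a load equal to the internal resistance."""
    return voltage ** 2 / (4.0 * internal_resistance)


volts = open_circuit_voltage(0.05, 2.0)   # assumed 0.05 V/K module, 2 K gradient
print(f"Carnot limit: {carnot_limit(22.0, 20.0) * 100:.2f}%")
print(f"open-circuit voltage: {volts:.2f} V")
print(f"matched-load power: {matched_load_power(volts, 2.0) * 1000:.2f} mW")
```

With these assumed values the output is on the order of a milliwatt at best, so the idea is possible in principle but would need a lot of engineering before it could charge a phone.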